Without effective compression, most local area networks (LANs) transporting video data would grind to a halt, so the right compression format is crucial.

Image and video compression can be either lossless or lossy. In lossless compression, such as GIF, you get an identical image after decompression. The price is that the compression ratio, ie, the data reduction, is limited. These formats are impractical for use in network video solutions, so several lossy compression methods and standards have been developed. The idea is to discard detail that the human eye does not notice and thereby increase the compression ratio tremendously.
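The lossless case can be illustrated with a minimal Python sketch. It uses the standard `zlib` module as a stand-in for a lossless image codec (it is not GIF), and the two 64x64 greyscale 'images' are synthetic test data invented for illustration. The point is only that the restored data is identical to the input, and that the achievable ratio depends heavily on the content.

```python
import random
import zlib
from typing import Tuple


def lossless_round_trip(data: bytes) -> Tuple[bytes, float]:
    """Compress and decompress data; return the restored bytes and the ratio."""
    compressed = zlib.compress(data, 9)
    restored = zlib.decompress(compressed)
    ratio = len(data) / len(compressed)
    return restored, ratio


# Hypothetical test data: raw greyscale pixels, 64x64.
flat = bytes([128]) * (64 * 64)                        # uniform grey surface
rng = random.Random(0)
noisy = bytes(rng.randrange(256) for _ in range(64 * 64))  # irregular detail

flat_restored, flat_ratio = lossless_round_trip(flat)
noisy_restored, noisy_ratio = lossless_round_trip(noisy)

print("identical after decompression:",
      flat_restored == flat and noisy_restored == noisy)
print("ratio, uniform image: %.1f" % flat_ratio)
print("ratio, noisy image:   %.2f" % noisy_ratio)
```

The uniform image shrinks dramatically while the noisy one hardly shrinks at all, which is why lossless methods alone give too little data reduction for real camera scenes.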

Compression methods also adopt two different approaches: still image compression and video compression.

Still image compression standards

Still image compression focuses on one picture at a time. The best-known example is JPEG, standardised in the early 1990s by the Joint Photographic Experts Group, which was formed in the mid-1980s. JPEG images can be viewed in standard web browsers. JPEG compression can be done at different user-defined compression levels. The compression level selected is directly related to the image quality requested, and the compression achieved also depends on the image itself: the more complex and irregular the image is, the larger the resultant file will be. The two images illustrate compression ratio versus image quality for a given scene at two different compression levels.
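The trade-off between compression level and image quality can be sketched as follows. This is not JPEG, which uses a discrete cosine transform and entropy coding; it is a deliberately simplified stand-in in which pixel values are rounded to a coarser step (the lossy part) and then packed with `zlib`. The test image, the step values and the function names are all assumptions made for illustration.

```python
import math
import zlib


def quantise(pixels: bytes, step: int) -> bytes:
    """Round each pixel down to a multiple of `step`; larger steps lose more detail."""
    return bytes((p // step) * step for p in pixels)


def compressed_size(pixels: bytes) -> int:
    """Size in bytes after lossless packing of the (already quantised) pixels."""
    return len(zlib.compress(pixels, 9))


def mean_abs_error(a: bytes, b: bytes) -> float:
    """Average per-pixel difference, used here as a crude quality measure."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical 64x64 greyscale test image: a smooth wave pattern.
WIDTH = HEIGHT = 64
image = bytes(
    int(127.5 + 127.5 * math.sin(x / 5.0) * math.cos(y / 7.0))
    for y in range(HEIGHT)
    for x in range(WIDTH)
)

results = {}
for step in (1, 8, 32):  # 1 = no loss, 32 = heavy loss
    q = quantise(image, step)
    results[step] = (compressed_size(q), mean_abs_error(image, q))
    print("step %2d: %5d bytes, mean error %.2f" % ((step,) + results[step]))
```

A coarser step gives a smaller file and a larger error, which mirrors the way a higher JPEG compression level trades image quality for file size.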

JPEG2000 was developed by the same group as JPEG, mainly for use in medical and photographic applications. At high compression ratios it performs slightly better than JPEG, but support in web browsers and other applications is still limited.

Video compression standards

Motion JPEG (M-JPEG) is the commonest standard in network video. A network camera captures individual images and compresses them into JPEG format. At frame rates of about 16 fps and above, the viewer perceives full motion video from a sequence of JPEG images. This is referred to as M-JPEG. Each individual image has the same quality, determined by the compression level chosen for the network camera or video server.
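Because every M-JPEG frame is a complete JPEG image, the bandwidth of a stream is roughly the average frame size multiplied by the frame rate. The short sketch below shows the arithmetic; the 30 KB average frame size is an assumed figure for illustration, not a value from a particular camera, and 1 KB is taken as 1000 bytes.

```python
def mjpeg_bandwidth_kbps(frame_size_kb: float, fps: float) -> float:
    """Approximate bandwidth in kbit/s for a stream of independent JPEG frames."""
    if frame_size_kb < 0 or fps < 0:
        raise ValueError("frame size and frame rate must be non-negative")
    return frame_size_kb * 8 * fps  # 8 bits per byte


# Assumed average JPEG frame size of 30 KB.
full_motion = mjpeg_bandwidth_kbps(30.0, 16)  # 3840.0 kbit/s
reduced = mjpeg_bandwidth_kbps(30.0, 8)       # 1920.0 kbit/s

print(full_motion, reduced)
```

Halving the frame rate halves the bandwidth, since no frame depends on any other.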

H.263 compression, on the other hand, targets a fixed bit rate. The downside is that when an object moves, the quality of the image decreases. H.263 was originally designed for video conferencing applications and not for surveillance, where detail is more crucial than a fixed bit rate.

One of the best-known audio and video streaming techniques is MPEG. MPEG's basic principle is to compare successive compressed images to be transmitted. The first compressed image is used as a reference frame, and only parts of the following images that differ from the reference image are sent. The network viewing station reconstructs images based on the reference image and the 'difference data'. Despite higher complexity, MPEG video compression leads to lower data volumes than M-JPEG.
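The reference-plus-difference principle described above can be sketched in a few lines of Python. This is a toy model only: frames are flat lists of pixel values, and the 'difference data' is a plain mapping from pixel index to new value. Real MPEG encoders work on blocks and use further techniques, and the names `encode`, `decode` and `scene` are hypothetical.

```python
from typing import Dict, List, Tuple

Frame = List[int]      # flat list of pixel values
Diff = Dict[int, int]  # pixel index -> value that differs from the reference


def encode(frames: List[Frame]) -> Tuple[Frame, List[Diff]]:
    """Keep the first frame whole; for later frames keep only changed pixels."""
    reference = frames[0]
    diffs = [
        {i: v for i, (r, v) in enumerate(zip(reference, frame)) if r != v}
        for frame in frames[1:]
    ]
    return list(reference), diffs


def decode(reference: Frame, diffs: List[Diff]) -> List[Frame]:
    """Rebuild every frame from the reference frame and its difference data."""
    frames = [list(reference)]
    for diff in diffs:
        frame = list(reference)
        for index, value in diff.items():
            frame[index] = value
        frames.append(frame)
    return frames


def scene(position: int) -> Frame:
    """Static 8-pixel background with one bright 'object' at `position`."""
    frame = [10] * 8
    frame[position] = 200
    return frame


frames = [scene(0), scene(1), scene(2)]  # the object moves one pixel per frame
reference, diffs = encode(frames)
changed = sum(len(d) for d in diffs)

print("changed pixels sent after the reference frame:", changed)
print("reconstruction is exact:", decode(reference, diffs) == frames)
```

Here only four changed pixels follow the eight-pixel reference frame, instead of sixteen pixels for two full frames, although each change also has to carry its position.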

MPEG is in practice more complex, often involving additional techniques such as prediction of motion in a scene and identifying objects. MPEG-1 aims to keep the bit-rate relatively constant at the expense of a varying image quality, typically comparable to VHS video quality, with a frame rate locked at 25 (PAL)/30 (NTSC) fps. MPEG-2 extended MPEG-1 to cover larger pictures and higher quality at the expense of a lower compression ratio and higher bit-rate, with the frame rate also locked at 25/30 fps.

MPEG-4 is a major development with many more tools to lower the bit-rate for a given image quality and application, and frame rate is not locked. However, most of the tools require so much processing power that they are impractical for network video.

Advanced Video Coding (AVC), also known as H.264, is expected to replace H.263 and MPEG-4 within the next few years.

Advantages and disadvantages of different standards

M-JPEG is simple and widely supported. It introduces little delay (ie, low latency) and is therefore also suitable for image processing, such as in video motion detection or object tracking. Any practical image resolution, from mobile phone display size (QVGA) up to full video (4CIF) image size and above (megapixel), is available with consistent image quality at the chosen compression ratio. The frame rate can be adjusted to limit bandwidth usage, but M-JPEG nonetheless generates a relatively large volume of image data.

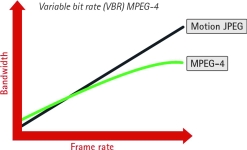

MPEG has the advantage of lower data volumes than M-JPEG, except at low frame rates as described below. If the available network bandwidth is limited, or if high frame rates are required while storage space is restricted, MPEG may be the preferred option. It provides a relatively high image quality at a lower bit-rate (bandwidth usage). However, higher complexity in encoding and decoding leads to higher latency. Note too that MPEG-2 and MPEG-4 are subject to licensing fees. The graph compares bandwidth use for M-JPEG and MPEG-4 for a given image scene with motion. At lower frame rates, where MPEG-4 compression cannot make extensive use of similarities between neighbouring frames, and due to the overhead generated by the MPEG-4 streaming format, the bandwidth needed is similar to M-JPEG.

Does one compression standard fit all?

Here are the points to consider when choosing a compression standard:

* What frame rate is required?

* Is the same frame rate needed at all times?

* Is recording/monitoring needed at all times, or only on motion/event?

* For how long must the video be stored?

* What resolution is required?

* What image quality is required?

* What level of latency is acceptable?

* How robust/secure must the system be?

* What is the available network bandwidth?

* What is the budget for the system?
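Several of these questions (bit-rate, recording schedule and retention time) combine into a simple storage estimate. The sketch below shows the arithmetic for a single camera; the 2000 kbit/s bit-rate, the 30-day retention period and the two recording schedules are assumed figures for illustration, and 1 GB is taken as 10^9 bytes.

```python
def storage_gb(bitrate_kbps: float, hours_per_day: float, days: int) -> float:
    """Storage in gigabytes needed for one camera over the retention period."""
    seconds = hours_per_day * 3600 * days
    bits = bitrate_kbps * 1000 * seconds
    return bits / 8 / 1e9


# Assumed average stream of 2000 kbit/s, kept for 30 days.
continuous = storage_gb(2000, 24, 30)  # recording around the clock
on_event = storage_gb(2000, 2, 30)     # about 2 hours of motion/event video per day

print("continuous: %.0f GB" % continuous)  # continuous: 648 GB
print("on event:   %.0f GB" % on_event)    # on event:   54 GB
```

Recording only on motion or events reduces the storage requirement in direct proportion to the hours actually recorded.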

For more information contact Roy Alves, Axis Africa, 011 548 6780, [email protected]

© Technews Publishing (Pty) Ltd. | All Rights Reserved.